System Architectures

GSWHC-B Getting Started with HPC Clusters → K1.1-B System Architectures

Relevant for: Tester, Builder, and Developer

Description:

- You will learn about the hardware components of an HPC cluster and their functions (basic level)

This skill requires no sub-skills

Level: basic

HPC Cluster Architecture

This is an introductory description of HPC cluster hardware and operation. Much of it will probably apply to the cluster you want to use, too. However, every cluster is different, so you should also read the description of your cluster's configuration.

An HPC cluster consists of a few to many servers that are connected by a high-performance communication network. The servers are called nodes. HPC clusters use batch systems to run compute jobs. Interactive access is usually limited to a few nodes.

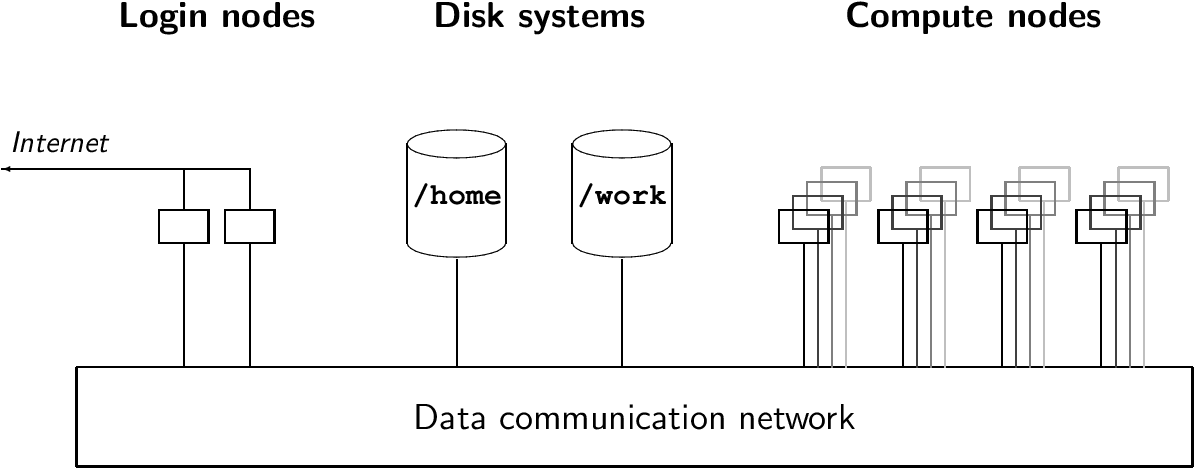

The figure sketches the architecture of an HPC cluster. It shows hardware components that essentially all clusters have. The following text explains the functions of these components and of some others.

Nodes

There are nodes with several functions:

Login or gateway nodes. This is the node you are on after logging into the cluster. There can be more than one such node. On many clusters this is the node on which you work interactively, i.e. where you compile programs and prepare and manage batch jobs. On some clusters, however, this node is configured only as a gateway. In that case you have to hop to (i.e. log into) another node that is configured for interactive work.

Compute nodes are the workhorses of a cluster. Batch jobs are executed on them. A standard compute node has CPUs and main memory. It can be equipped with additional or special compute devices (e.g. GPUs, vector cards or FPGAs). The number of CPUs and the main memory size can differ between node types and between clusters. Many clusters run diskless, i.e. their compute nodes have no local disk. Local disks are nice to have for heavy scratch I/O. However, spinning disks are components that fail relatively often, and it is not economical to put disks into every node if only a few applications need scratch I/O. Compute nodes can be allocated exclusively (not shared) to a single batch job, or they can be shared by more than one job.
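
Because the number of CPUs and the memory size vary, it can help to check them on the node you are actually on, for example at the start of a batch job. The following is a minimal sketch in Python, assuming a Linux (or other POSIX) node; the function names are hypothetical helpers, not part of any cluster tool. On a node shared by several jobs, the batch system may restrict the CPUs a job can use, which is why the sketch reports the CPUs available to the process separately from the CPUs of the whole node.

```python
import os


def node_resources():
    """Return (number of logical CPUs, main memory in GiB) of the current node."""
    # os.cpu_count() counts logical CPUs (hardware threads), not physical cores.
    cpus = os.cpu_count() or 1
    # Page size times number of physical pages gives the main memory in bytes
    # (these sysconf names are available on Linux and most POSIX systems).
    mem_bytes = os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")
    return cpus, mem_bytes / 2**30


def cpus_usable_by_this_process():
    """CPUs this process may run on; a batch system may restrict this set."""
    # os.sched_getaffinity exists only on some platforms (e.g. Linux).
    if hasattr(os, "sched_getaffinity"):
        return len(os.sched_getaffinity(0))
    return os.cpu_count() or 1


if __name__ == "__main__":
    cpus, mem_gib = node_resources()
    print(f"logical CPUs on node: {cpus}, main memory: {mem_gib:.1f} GiB")
    print(f"CPUs usable by this process: {cpus_usable_by_this_process()}")
```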

Admin or system nodes. These nodes are mentioned for completeness. They work in the background and are necessary for the operation of the cluster, e.g. for running the batch service, or starting and shutting down compute nodes.

Disk nodes provide global file systems, i.e. file systems that can be used on all other kinds of nodes. Several nodes can provide a single file system. The exact mechanism is hidden from the user, who just sees the file system and does not need to know about the nodes providing it.
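
Although the disk nodes are hidden, you can still find out which file system a directory lives on by looking at the node's mount table. The following is a minimal sketch in Python, assuming a Linux node where the mount table is readable at /proc/mounts; `filesystem_of` is a hypothetical helper. Common type names are nfs or nfs4 for network file systems and, for example, lustre, gpfs or beegfs for parallel file systems, but what you see depends on your cluster.

```python
import os


def filesystem_of(path, mounts_text=None):
    """Return (mount point, file system type) of the file system holding `path`.

    Parses a mount table in Linux /proc/mounts format, one mount per line:
        device mount_point fs_type options dump pass
    Mount points containing spaces (escaped as \\040) are not decoded here.
    """
    if mounts_text is None:
        with open("/proc/mounts") as f:  # Linux-specific location
            mounts_text = f.read()
    path = os.path.realpath(path)
    best = ("/", "unknown")
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue
        mount_point, fs_type = fields[1], fields[2]
        # The mount point must be a prefix of `path` ending at a "/" boundary;
        # the longest such prefix (last one wins on ties) is the file system used.
        prefix = mount_point.rstrip("/") + "/"
        covers = path == mount_point or path.startswith(prefix)
        if covers and len(mount_point) >= len(best[0]):
            best = (mount_point, fs_type)
    return best


if __name__ == "__main__":
    print(filesystem_of(os.getcwd()))
```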

Special nodes. The node types mentioned above are common to all clusters. In addition, there can be special nodes, e.g. for data movement, visualization, or pre- and post-processing of large data sets.

Head node. The term head node is not used consistently. It can mean login or admin node. In both cases head means management (either by a user or an administrator).

Global file systems

Global file systems are available on all nodes of the cluster (in particular on login and compute nodes). They are convenient because their files can be accessed directly on every node. Network file systems (e.g. NFS) already provide this functionality. Parallel file systems add to it: quantitatively, they offer higher I/O performance than classic network file systems; qualitatively, they allow several processes to write into the same file concurrently.
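
The access pattern behind "several processes write into the same file" can be illustrated on a single machine: each process writes its own record at its own, non-overlapping offset. The following is a minimal sketch in Python, assuming a POSIX system (`os.pwrite` and the "fork" start method are POSIX-only); the file name and record layout are hypothetical. On a real cluster the processes would run on different nodes against a parallel file system, and applications typically use an I/O library such as MPI-IO rather than raw system calls.

```python
import multiprocessing as mp
import os

BLOCK = 4  # bytes per process: "NNN\n" (tiny, for illustration only)


def write_block(args):
    """Write this process's record at its own, non-overlapping offset."""
    path, rank = args
    data = f"{rank:03d}\n".encode()  # exactly BLOCK bytes for rank < 1000
    fd = os.open(path, os.O_WRONLY)
    try:
        # pwrite writes at an explicit offset, independent of the file position.
        os.pwrite(fd, data, rank * BLOCK)
    finally:
        os.close(fd)


def parallel_write(path, nprocs):
    """Let `nprocs` processes write concurrently into one file."""
    # Create (or truncate) the shared file once, before the workers start.
    with open(path, "wb"):
        pass
    # "fork" avoids re-importing this module in the worker processes.
    ctx = mp.get_context("fork")
    with ctx.Pool(nprocs) as pool:
        pool.map(write_block, [(path, rank) for rank in range(nprocs)])


if __name__ == "__main__":
    parallel_write("shared.dat", 4)
    with open("shared.dat") as f:
        print(f.read(), end="")
```

Because the byte ranges never overlap, the result does not depend on the order in which the processes run.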

Communication network

The communication network has two purposes: it enables high-speed data communication for parallel applications running on multiple nodes, and it provides a high-speed connection to the disk systems of the cluster. The performance of the communication network is chosen according to the demands of the parallel applications running on the machine and to their I/O requirements. If performance requirements are low, a cluster can be built without a high-performance network, using only an Ethernet network. In addition to the communication network used by applications, there can be further networks for system purposes (e.g. for managing nodes).
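
Why network performance has to match the applications can be illustrated with a simple latency-bandwidth model, in which sending a message of n bytes takes t = latency + n / bandwidth. The following is a minimal sketch in Python; the latency and bandwidth values are assumed round numbers for illustration, not measurements of any real network.

```python
def transfer_time(nbytes, latency_s, bandwidth_bytes_per_s):
    """Simple latency-bandwidth model: t = latency + size / bandwidth."""
    return latency_s + nbytes / bandwidth_bytes_per_s


# Illustrative (assumed) parameters, not measurements of any real network.
NETWORKS = {
    "ethernet (assumed 50 us, 1.25 GB/s)": (50e-6, 1.25e9),
    "high-performance (assumed 1.5 us, 12.5 GB/s)": (1.5e-6, 12.5e9),
}

if __name__ == "__main__":
    for size in (8, 100_000_000):  # a small message and a 100 MB transfer
        for name, (lat, bw) in NETWORKS.items():
            t = transfer_time(size, lat, bw)
            print(f"{size:>11} bytes over {name}: {t * 1e6:12.1f} us")
```

In this model small messages are dominated by latency and large transfers by bandwidth, so applications that exchange many small messages profit most from a low-latency network, while I/O-heavy workloads profit from high bandwidth.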

Connections to the internet

Usually, only the login/gateway nodes can be reached from the outside. Some clusters have data mover nodes that are reachable as well.

Which nodes can connect to the internet from inside the cluster depends on the policy of the computing center.
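
If the documentation of your cluster does not say which nodes may open outbound connections, you can test it from the node in question (for compute nodes, from inside a batch job). The following is a minimal sketch in Python; `can_connect` is a hypothetical helper, and the target host and port in the usage line are only an example. A result of False means the connection failed, which may be due to a site policy, a firewall, failed name resolution, or an unreachable target.

```python
import socket


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be opened from this node."""
    try:
        # create_connection resolves the name and tries each address in turn.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and failed name resolution.
        return False


if __name__ == "__main__":
    # Example target only; use a host and port you are allowed to contact.
    print(can_connect("example.org", 443))
```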